The development and implementation of any technology that opens new frontiers involves a progression from concept to prototype to minimum viable product, culminating in a scalable system with the desired functionality. Early developments offer glimpses of the potential, but only the achievement of scalable capacity unlocks true commercial value. These earlier stages of theory and possibility are essential for understanding what it takes to progress to inevitability.

Air travel is one of many examples of a technology developing from theory to commercial reality. Before 1903, sustained human flight was a theoretical goal. From watching birds, bats and bugs, humans had long known that flight was possible, but machines modelled on flapping wings (most famously designed by da Vinci) proved unsuccessful for centuries. A shift to a fixed-wing approach produced physical models that demonstrated aircraft flight was possible, but these models lacked a power source. The Wright brothers’ first 12-second flight proved that powered human flight in a heavier-than-air vehicle was possible and, years later, improvements to aircraft enabled sustained transatlantic passenger flight, setting a global inevitability in motion. However, it wasn’t until the development of jet engines, pressurized fuselages, and a network of international airports that long-distance, reliable, profitable transport of people and cargo by air became a reality.

Quantum computing, as it commercializes a branch of physics, is following a similar phased development trajectory. What is especially exciting in the field now is the building momentum. Over the past few decades, quantum computing has moved from theory to possibility, and at Photonic, we’re focused on making distributed, fault-tolerant quantum computing a global inevitability.

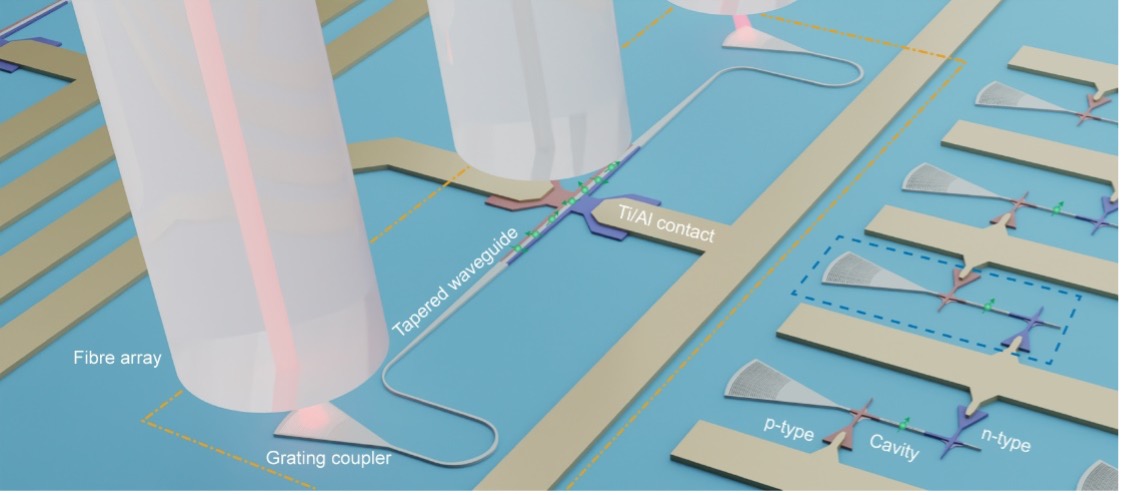

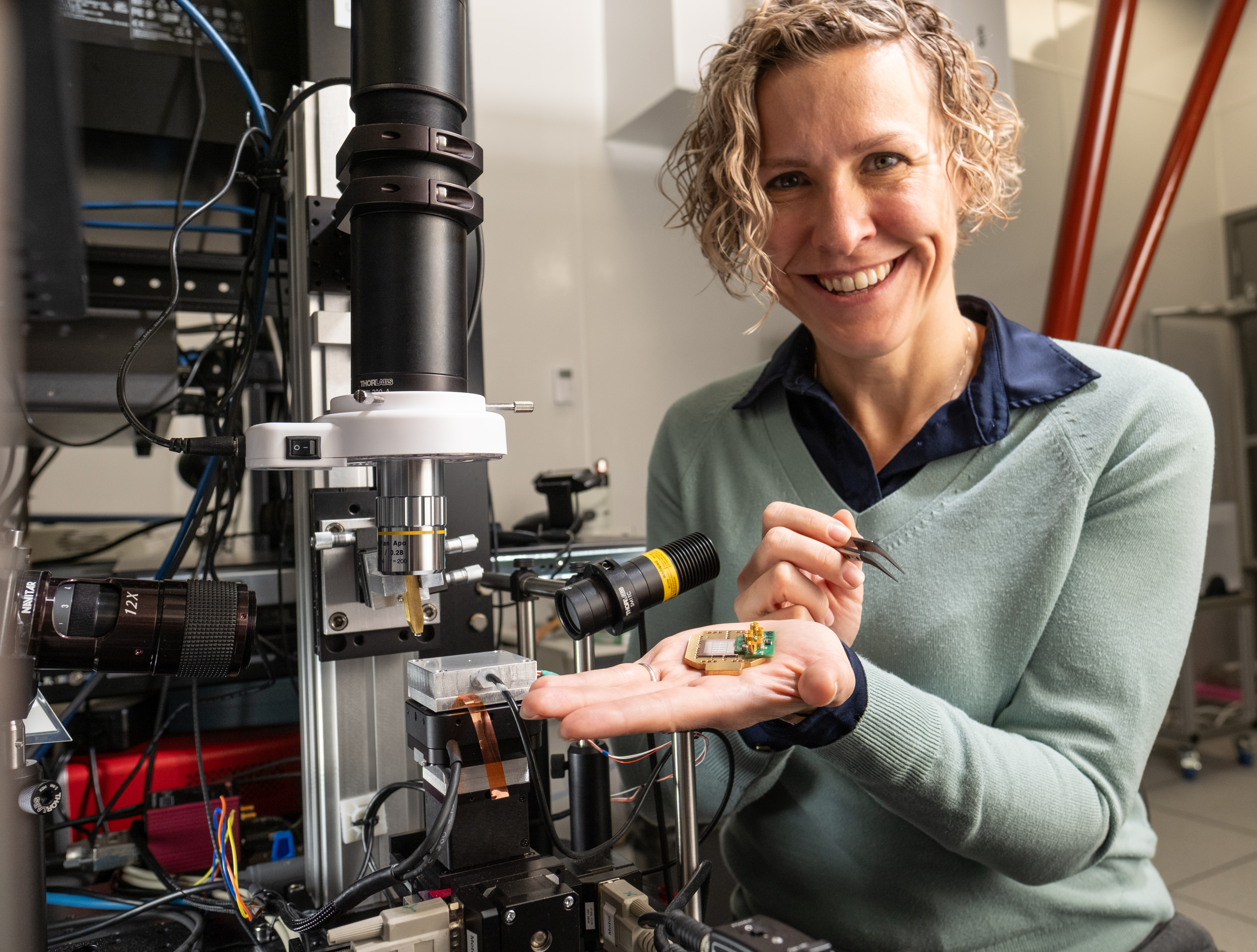

Photonic’s approach is a combined technological platform for both quantum computing and networking: our architecture is optimized for entanglement distribution and leverages colour centre spins in silicon (T centres). With low-overhead quantum error correction and a native optical interface that enables high-performance non-local operations, silicon T centres can drastically accelerate the timeline for realizing modular, scalable, fault-tolerant quantum processors and repeaters. Photonic has been relentlessly pursuing (and achieving!) the technological milestones required for this end goal.

Photonic’s Quantum Approach: The Path to Possible

Why do we place such importance on a platform based on spins in silicon with a native optical interface, one that can serve as a combined platform for both fault-tolerant quantum computing and quantum networking? Because we know that without the ability to reliably scale and distribute entanglement, the full value of quantum computing can’t be realized. A silicon-based platform lets us leverage decades of semiconductor development to scale up high-performance chips, and the optical interface lets us plug into a system of optical interconnects that distribute entanglement between chips. The result is a fully integrated system built on well-researched, proven technologies that have benefited from years of optimization and engineering in other industries.

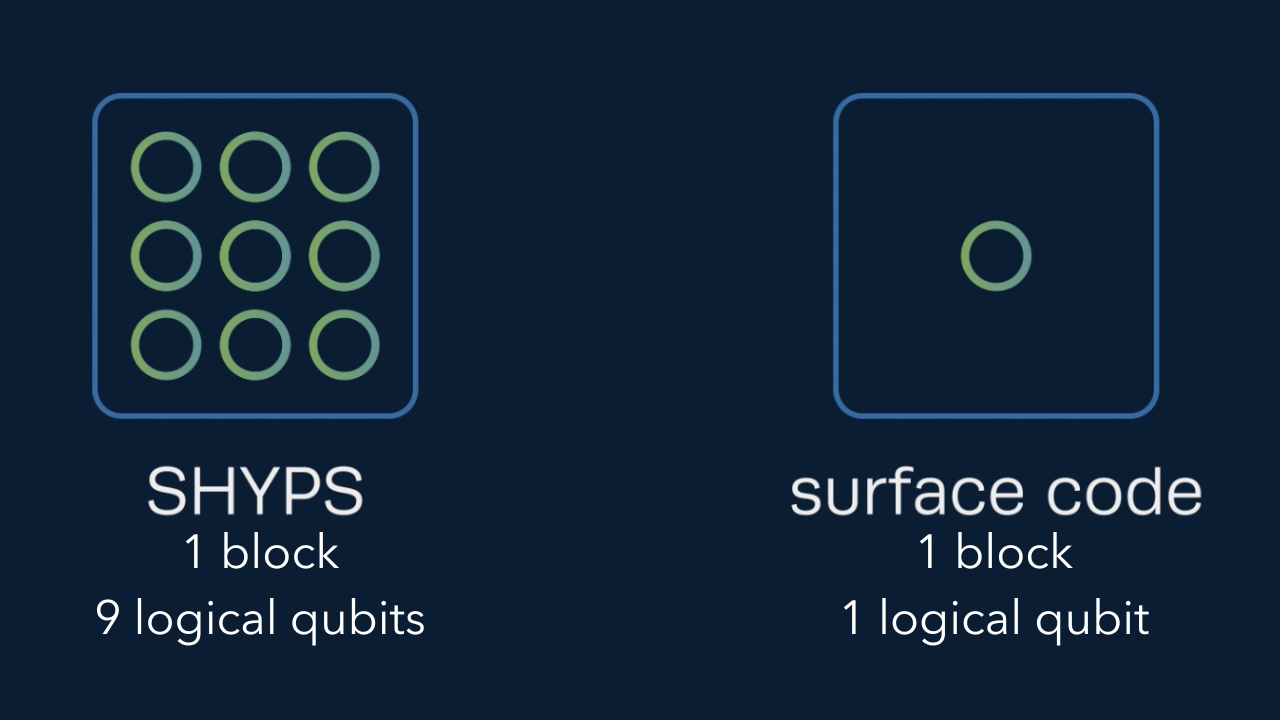

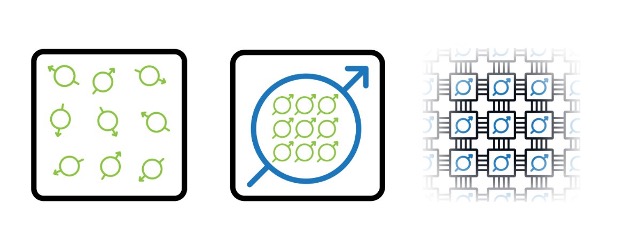

Those familiar with quantum computing will know that the path to scale is typically broken into three phases, though both the naming (phases, levels, stages) and the specifics of each step vary. Photonic’s focus is, as it has always been, on commercially relevant quantum computing, which we see emerging in the third phase with the availability of large numbers of logical qubits. Photonic’s approach to scale is distributed: thousands of logical qubits can be networked across modules. The path to that point runs through these developmental phases:

- NISQ (“noisy intermediate-scale quantum”): This phase was dominated by small numbers of qubits built from a variety of physical systems. NISQ prototypes were single-module devices whose qubits were too few or too noisy to implement quantum error correction effectively.

- Small-Scale Logical Qubits: The introduction of error correction, and the fault tolerance it enables, has brought us to our current state, in which the number and quality of logical qubits within a single computing module are steadily increasing.

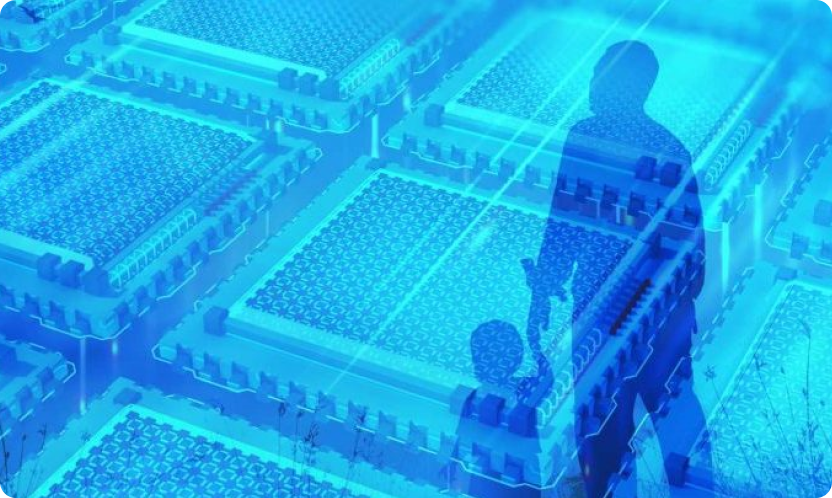

- Large-Scale Networked Logical Qubits: Complex quantum computations that require many logical qubits will become possible once systems can efficiently use large numbers of identical, manufacturable, high-quality logical qubits.

NISQ machines do not have enough high-quality qubits to reliably run algorithms that offer a meaningful advantage over classical supercomputers. Specifically, they cannot provide the number or quality of qubits that quantum error correction requires, and without error correction they lack a path to a system that is appreciably better than what classical systems can achieve. While this work has been instrumental in exploring the potential of quantum computing, it hasn’t yielded any significant commercially relevant use cases to date.

We are now seeing the start of a very active period of error-corrected quantum demonstrations; small-scale fault-tolerant qubits have only recently become a reality. The diversity of approaches across the quantum computing ecosystem shows that we are in a ‘pre-dominant design’ explosion of methods, before any single design has become the standard. We will see single quantum modules demonstrate quantum error correction (QEC) protocols such as the surface code or quantum low-density parity-check (QLDPC) codes. Useful scientific results may emerge from these computers with small numbers of logical qubits, but the known high-value use cases of commercial relevance (e.g., Shor’s algorithm) require many more logical qubits.
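To give a rough sense of why code choice matters for overhead, here is a back-of-the-envelope Python sketch that counts physical qubits for 12 logical qubits under two textbook assumptions, not Photonic’s own codes or hardware: the rotated surface code, where each logical qubit uses d² data qubits plus d² − 1 measurement qubits, and the published [[144, 12, 12]] bivariate bicycle QLDPC code, which stores 12 logical qubits in 144 data qubits plus 144 check qubits. The function names are hypothetical, and the count ignores decoding, logical gates and magic-state resources.

```python
def surface_code_physical_qubits(distance: int, logical_qubits: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits per logical qubit."""
    per_logical = distance * distance + (distance * distance - 1)
    return per_logical * logical_qubits


def qldpc_physical_qubits(n_data: int, n_check: int, k: int, logical_qubits: int) -> int:
    """Whole blocks of an [[n, k, d]] code, each block using n_data data qubits plus n_check check qubits."""
    blocks = -(-logical_qubits // k)  # ceiling division: number of code blocks needed
    return blocks * (n_data + n_check)


# Assumed comparison at code distance 12 for 12 logical qubits.
surface = surface_code_physical_qubits(distance=12, logical_qubits=12)
qldpc = qldpc_physical_qubits(n_data=144, n_check=144, k=12, logical_qubits=12)
print(surface, qldpc, round(surface / qldpc, 1))
# prints: 3444 288 12.0
```

The roughly tenfold saving is not free: good QLDPC codes generally need long-range connections between qubits, which is one reason connectivity matters so much for low-overhead fault tolerance.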

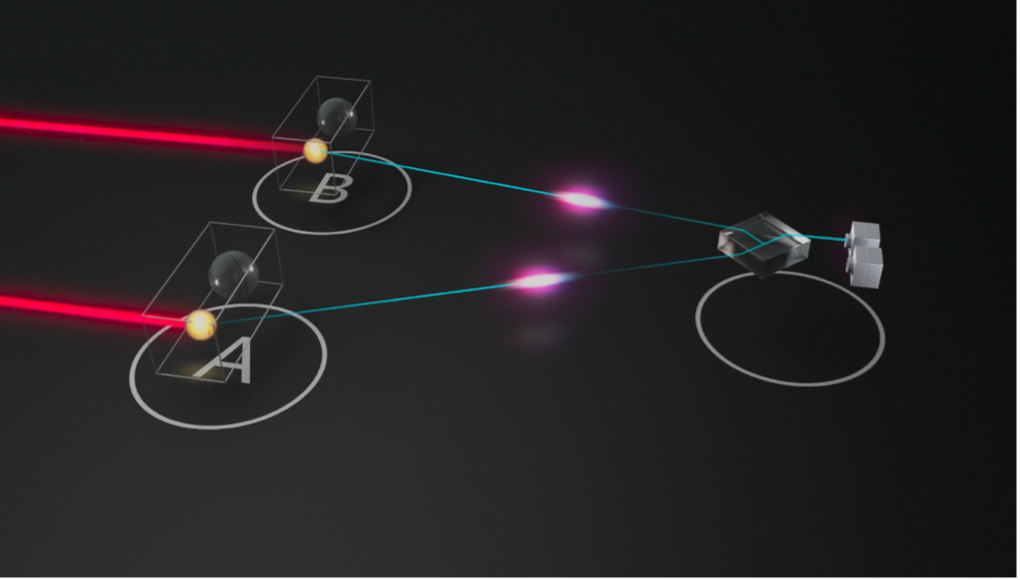

It is with the arrival of large-scale quantum computing that the industry will see systems capable of implementing algorithms that provide exponential speedups on complex computational challenges, meeting market demand for high-value, high-impact use cases such as drug discovery, materials science, and catalyst development. One approach to effective performance at this scale is to design quantum platforms that work in a distributed, networked manner, with operations acting both within and between modules. Operations that act on more than one module consume distributed entanglement, so commercially viable modular quantum platforms will need to distribute entanglement at a rate that meets or exceeds the rate at which computations consume it; otherwise entanglement becomes a bottleneck on computation time. High connectivity allows entanglement to be distributed directly to where it’s needed rather than along a winding route through multiple connections (akin to flying direct rather than making multiple stopovers). A critical technical consideration for distributed quantum computing is therefore the total entanglement distribution bandwidth between modules.
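The bandwidth argument can be illustrated with a toy Python model, a sketch under simplifying assumptions rather than a description of Photonic’s network: each inter-module gate consumes one end-to-end Bell pair, that pair is stitched together by entanglement swapping from one link-level pair per hop, remote gates are spread evenly over module pairs, and entanglement generation runs in parallel with local computation. The workload numbers and function names are hypothetical. It compares a fully connected network (every module pair one hop apart) with modules wired in a line.

```python
from itertools import combinations


def mean_hops_linear_chain(num_modules: int) -> float:
    """Average number of links separating two distinct modules wired in a line (0-1-2-...)."""
    pairs = list(combinations(range(num_modules), 2))
    return sum(j - i for i, j in pairs) / len(pairs)


def entanglement_limited_runtime(local_time_s: float, remote_gates: int,
                                 hops_per_gate: float, link_pairs_per_s: float) -> float:
    """Wall-clock estimate when entanglement generation overlaps with local work."""
    # Simplification: one link-level Bell pair per hop per remote gate (no losses or purification).
    link_pairs_needed = remote_gates * hops_per_gate
    entanglement_time_s = link_pairs_needed / link_pairs_per_s
    # Whichever resource is slower sets the pace of the whole computation.
    return max(local_time_s, entanglement_time_s)


# Hypothetical workload: 10 modules, 1e6 inter-module gates, 1 s of local computation,
# and an aggregate link-level generation rate of 2e6 Bell pairs per second.
direct = entanglement_limited_runtime(1.0, 1_000_000, 1, 2e6)
chain = entanglement_limited_runtime(1.0, 1_000_000, mean_hops_linear_chain(10), 2e6)
print(f"direct links: {direct:.2f} s, linear chain: {chain:.2f} s")
# prints: direct links: 1.00 s, linear chain: 1.83 s
```

With direct links the run stays limited by local computation, while the same entanglement rate spread over multi-hop routes makes entanglement the bottleneck, which is the stopover effect described above.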

Inevitable Progress to Scale and Beyond

At Photonic, we’ve been working from a quantum systems engineering perspective, starting with what a scalable, distributed quantum computing network would require. We’ve focused on these requirements (e.g., telecom connectivity, data centre compatibility) to build a platform that accommodates both the performance of individual computing modules and the scalability of the network.

Our architecture is designed to enable horizontal scalability while optimizing for entanglement distribution, harnessing the potential of silicon colour centres. The telecom, silicon-integrated approach we have taken at Photonic has intrinsic capabilities in this regard: colour centres, which combine spin qubits with a photonic interface, are extremely promising for high-fidelity operation, while high connectivity brings fault tolerance within reach at low overhead.

Our native optical interface produces entangled photons that can distribute entanglement at telecom wavelengths, so no transduction is required to use optical interconnects between modules. An additional benefit of operating at telecom wavelengths is the ability to integrate with existing infrastructure, namely the optical switch networks developed for telecommunications. As such, Photonic’s architecture is well suited to executing large-scale algorithms quickly and reliably across multiple modules over a fast quantum network.

Photonic’s strategy lies in taking the most direct path to realizing the true potential of large-scale, distributed, fault-tolerant systems for commercially relevant quantum computing applications.

- As interest in NISQ-based technology declines, we’re seeing a highly competitive race to deliver commercial value, especially through logical qubit development. Expectations for fault-tolerant qubits will keep rising, and the appetite for them will grow rapidly.

- Many companies and researchers are making great progress on small-scale fault-tolerant prototypes. Each milestone reached benefits the industry as a whole: we can all learn from innovations in materials and qubits, and better understand the strengths and limits of various approaches.

- There is an ever-increasing desire to reach a stage where the key known, commercially valuable algorithms can be implemented; on our path, this requires a functional, modular system. At this stage, we anticipate that platforms capable of distributed quantum computing will thrive. We are encouraged to see the industry also acknowledging the necessity of interconnected quantum modules. A recent Global Quantum Intelligence Outlook Report on Scalable Quantum Hardware reached the following conclusion:

The report highlights the necessity for a modular approach to scaling in nearly all proposed quantum computing architectures. This modular approach, which emphasizes distributed rather than monolithic quantum computing stacks, offers not only scalability but also flexibility, maintainability, and redundancy. It also emphasizes how most architectures will ultimately need to leverage interconnects, and how performant optical photonic interconnects hold the promise of synergies in quantum communications and networking.

We cannot overstate how exciting it is to be on the cusp of technological advancements whose impacts we can’t yet fully fathom. Just as the Wright brothers and others at the earliest frontiers of flight likely could not have imagined applications ranging from specialized water bombers to fighter jets to planes capable of carrying 250 tons of cargo or flying to the edge of space, our understanding of the potential of quantum computing is just beginning. From catalyst discovery for new fuels, to materials science for solar energy capture and battery development, to pharmaceutical design for medical advancement, the possibilities seem endless. We are dedicated to developing quantum computing and networking capacity to unleash its maximum potential for doing good in the world.